Three edge computing trends and why you should care

Edge computing is a distributed computing model that processes data at the periphery of a network, hence the term ‘edge’. The internet has historically been built on centralized systems and data centers; however, the growing river of real-time data is straining these systems. Bandwidth limitations, latency issues, and network disruptions can leave operations slow or incomplete. Edge computing addresses this by moving some portion of storage or computation closer to the source of the data itself.

Rather than sending all data to a central processing center, edge computing processes and analyzes much of it where it is generated, at a series of “nodes”. Think of a node as a vertex on a graph: any point in the network that can send, receive, or process data. By establishing nodes throughout an area, or on IoT devices themselves, data processing can be ‘outsourced’ to these devices instead of being sent back to data centers. This has benefits that can be seen across multiple industries today.
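To make the idea of a node more concrete, here is a minimal Python sketch. It assumes a hypothetical `EdgeNode` class and an arbitrary alert threshold: the node keeps raw sensor readings locally and produces only a small summary that would be sent upstream. It illustrates the pattern rather than any particular product's API.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    """Hypothetical edge node: processes readings locally and
    forwards only a compact summary to the central data center."""
    node_id: str
    alert_threshold: float  # assumed, application-specific limit
    readings: list[float] = field(default_factory=list)

    def ingest(self, value: float) -> None:
        # Raw data stays on the node instead of crossing the network.
        self.readings.append(value)

    def summarize(self) -> dict:
        # Only this small dictionary would travel upstream.
        summary = {
            "node": self.node_id,
            "count": len(self.readings),
            "mean": mean(self.readings) if self.readings else None,
            "alerts": [v for v in self.readings if v > self.alert_threshold],
        }
        self.readings.clear()  # start a fresh window after each upload
        return summary


node = EdgeNode("line-3-sensor", alert_threshold=80.0)
for temperature in [71.2, 72.0, 85.5, 70.9]:
    node.ingest(temperature)

print(node.summarize())
```

Four readings go in, but only one count, one average, and one out-of-range value leave the node, which is the bandwidth saving the paragraph above describes.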

In fact, several trends are emerging as edge computing becomes more widespread. Because some of these trends transcend specific industries, let’s look broadly at some of the latest trends in the industry, how IoT is and will be affected by their deployment, and what you can take from them to apply in your business moving forward.

Trend 1 – Containerization

What is it?

Think of it this way: software has to be compatible with the hardware and operating system it runs on, and it usually depends on specific libraries and configuration. If you’re trying to run something at scale, these differences across devices can slow the rollout of your product or code. Enter edge containers. A container packages software together with its dependencies in an isolated ‘box’ that runs the same way on any host with a compatible container runtime, and container images can be built for several CPU architectures so the same package can target different kinds of devices. This makes software easier to scale as the tech ecosystem becomes more diverse. Running containers on edge nodes lets you distribute packages and software consistently across devices at scale. Amazon Web Services is one example, offering managed container services such as Amazon ECS and Amazon EKS.

Use Cases

Docker can package an application and its dependencies into a container that runs on any host with a compatible container runtime. Kubernetes, an open-source platform for orchestrating containers across a cluster of machines, can also be used in a variety of edge computing contexts, and it is likely to become more important as more workloads are extended to the edge. Among other features, Kubernetes offers a loosely coupled mechanism for service discovery across a computing cluster.

Market Opportunity and Benefits

In a 2020 survey conducted by the Cloud Native Computing Foundation, 84% of respondents said they were running containers in production. Containers are already a standard unit of software deployment, and their use is likely to keep growing as businesses seek to launch products across multiple platforms simultaneously without the headache of coding separately for each one. The market for containers was roughly USD 8 billion in 2021 and is growing at a 12% compound annual growth rate (CAGR).

Benefits of Containers

- Faster time to market for software products - because a container can be created, started, replicated, or destroyed in seconds, the release process is more streamlined.

- Standardization and portability - you can run containers across different clouds, avoiding vendor lock-in.

- Cost-effectiveness - you can deploy only what you need in edge containers and simplify your code.

- Security - containers are isolated from one another, which limits how far a problem in one can spread, although they share the host's kernel and still need careful configuration.

Trend 2 – Content Delivery Networks

What is it?

This is where edge computing started, and CDNs still play a key role in its rollout today. Beginning in the 1990s, CDNs were developed to relieve performance bottlenecks on the internet. Edge nodes are spread across a geographic area to reduce the request load on a centralized data center by localizing content and data processing. Each node caches the content most frequently requested in its area, so that content can be delivered faster and more reliably without querying the central data center.
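The caching behavior described above can be sketched in a few lines of Python. This is a simplified illustration that assumes least-recently-used (LRU) eviction and a hypothetical `fetch_from_origin` callback standing in for the central data center; production CDNs layer on more elaborate policies such as expiry times and cache-control headers.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Minimal sketch of a CDN edge node cache (LRU eviction assumed)."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin  # hypothetical origin call
        self.store = OrderedDict()  # url -> content, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self.store:
            # Cache hit: serve locally and mark as most recently used.
            self.store.move_to_end(url)
            self.hits += 1
            return self.store[url]
        # Cache miss: this request has to travel to the central origin.
        self.misses += 1
        content = self.fetch_from_origin(url)
        self.store[url] = content
        if len(self.store) > self.capacity:
            # Evict the least recently used item to stay within capacity.
            self.store.popitem(last=False)
        return content


origin_calls = []

def origin(url: str) -> bytes:
    origin_calls.append(url)
    return f"content of {url}".encode()


cache = EdgeCache(capacity=2, fetch_from_origin=origin)
cache.get("/index.html")  # miss: fetched from the origin
cache.get("/index.html")  # hit: served from the edge node
print(cache.hits, cache.misses, origin_calls)
```

Popular items stay at the edge, and only misses generate traffic back to the data center.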

Many service-based applications depend heavily on content delivery networks and edge nodes. Because IoT devices create so much data, it is necessary to offload the processing of this data to nodes that can isolate certain requests and handle them locally to improve quality and reduce latency. Without edge nodes, many of these services would not be practical. Amazon Web Services, for example, offers edge locations around the world through its Amazon CloudFront CDN, which businesses can use to deploy their services. As IoT becomes more ubiquitous, content delivery networks will be increasingly necessary, and edge computing is and will remain at the forefront of both product localization and delivery.

Use Cases

Advances in CDNs make it easier to deploy machine learning locally to power real-time applications for smart campuses such as universities, logistics hubs, buildings, factories, airports, and retail stores. Gaming services, including virtual and augmented reality titles, offered by providers like Steam, Xbox Game Pass, and Stadia will also be able to leverage lower latency to improve the experience for their customers.

Market Opportunity

The CDN market was valued at USD 12 billion in 2020 and is expected to reach USD 50 billion by 2026, a CAGR of 27%. As the tail end of the pandemic continues to unfold, content delivery networks will continue to be at the forefront of how businesses deliver their products and how those products are consumed. Localized markets are increasingly valuable to service-based businesses that want to offer content tailored to specific regions or audiences, stored, processed, and distributed locally to cut costs and latency.
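Growth figures like these are easy to sanity-check. The short Python sketch below (the function names are illustrative) computes CAGR as (end / start)^(1 / years) - 1 and applies it to the CDN figures quoted above; the result, roughly 27% a year, is consistent with the stated rate.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` every year for `years` years."""
    return start_value * (1 + rate) ** years


# CDN market figures from the text: USD 12 billion (2020) -> USD 50 billion (2026).
implied = cagr(12, 50, 2026 - 2020)
print(f"Implied CDN CAGR: {implied:.1%}")

# Round trip: compounding the implied rate for six years recovers the 2026 figure.
print(f"USD {project(12, implied, 6):.1f} billion")  # prints "USD 50.0 billion"
```

The same two functions can be used to check the container and swarm intelligence growth rates quoted elsewhere in this article.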

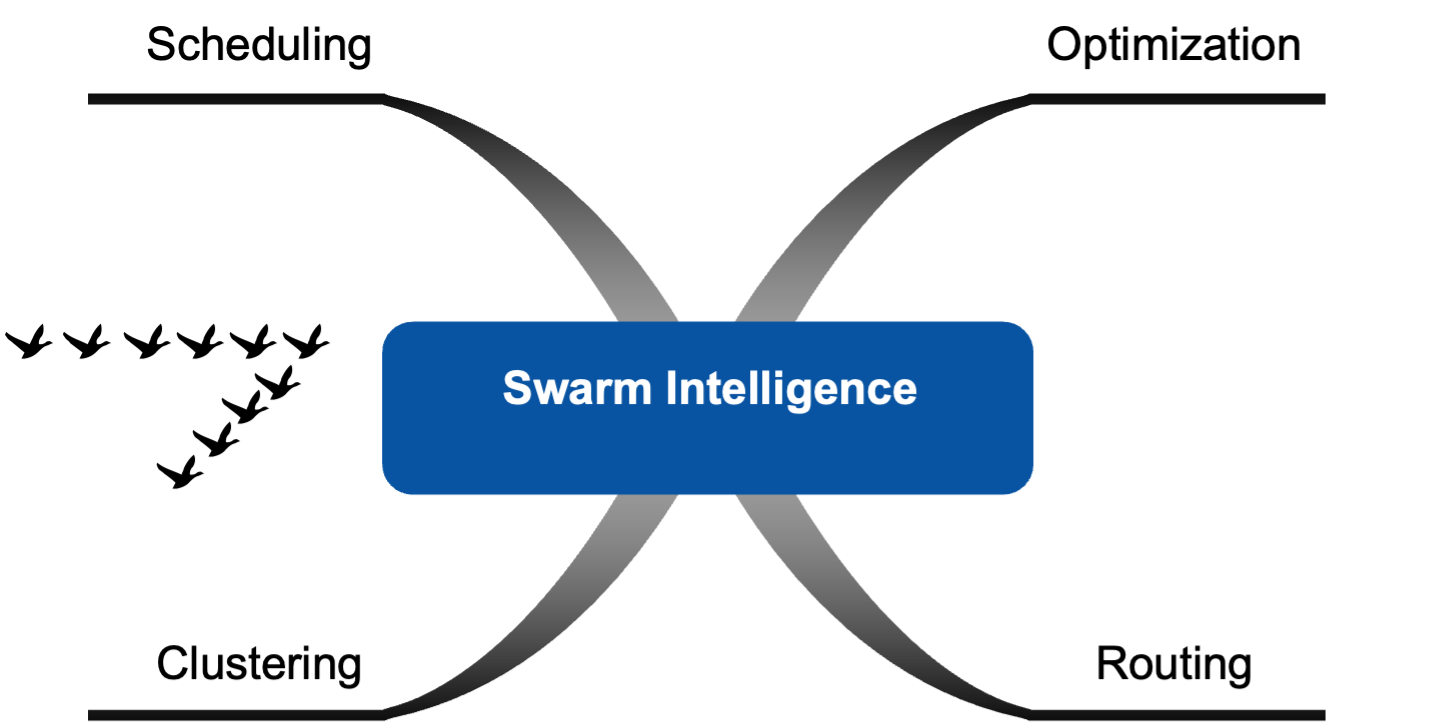

Trend 3 – Swarm Intelligence

What is it?

Swarm intelligence (SI) is a branch of AI based on the collective behavior of decentralized, self-organized systems. IoT systems generate large volumes of data that must be sorted through with powerful algorithms to solve complex problems. In the past, this data would be sent to a data center to be analyzed and parsed; with SI at the ‘intelligent edge’, however, edge devices at the same level can aggregate data locally and communicate directly with each other, without a control layer. Each device can act as a sensor, actuator, and processor, an all-in-one solution that reduces reliance on control layers such as cloud platforms and data centers.

As field and edge devices get smarter, they can gather data, make decisions, and act on those decisions independently. Communicating with other devices at the same level helps them get the information they need to make these decisions. Because running machine learning in the cloud is likely to become increasingly expensive as data grows, it makes sense to process data locally, using the interconnectivity of IoT and SI to make decisions, solve complex problems, and reduce latency, which key emerging sectors will rely on.
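Swarm intelligence covers many algorithms, such as ant colony and particle swarm optimization. As a simpler stand-in, the Python sketch below uses randomized pairwise gossip averaging, a basic decentralized consensus technique, to show how peer devices can reach a shared estimate without any control layer. The vehicle names, speeds, and links are hypothetical.

```python
import random


def gossip_average(values: dict[str, float],
                   links: list[tuple[str, str]],
                   rounds: int = 500,
                   seed: int = 0) -> dict[str, float]:
    """Randomized pairwise gossip: at each step, two directly linked devices
    replace their local estimates with the average of the two. No device
    sees the full data set or talks to a central server."""
    rng = random.Random(seed)
    estimates = dict(values)  # each device's local state
    for _ in range(rounds):
        a, b = rng.choice(links)  # two neighbors that happen to exchange data
        avg = (estimates[a] + estimates[b]) / 2
        estimates[a] = estimates[b] = avg  # the pair's total is unchanged
    return estimates


# Hypothetical vehicles sharing locally measured traffic speeds (km/h).
speeds = {"car_a": 42.0, "car_b": 55.0, "car_c": 38.0, "car_d": 61.0}
# Each vehicle can only reach the vehicles next to it: a chain, not a hub.
links = [("car_a", "car_b"), ("car_b", "car_c"), ("car_c", "car_d")]

for car, estimate in gossip_average(speeds, links).items():
    print(car, round(estimate, 2))
```

Because each exchange preserves the pair's total, every estimate converges toward the network-wide mean (49.0 km/h here), even though no vehicle ever communicates beyond its immediate neighbors.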

Use Cases

Swarm intelligence has applications in a wide range of scenarios involving discrete objects that operate in relation to each other. For example, vehicle-to-vehicle communication about road conditions, routing, and breakdowns can improve the overall efficiency of the transport system by leveraging the data and computing power of the vehicles in the network. Other SI use cases include automated guided vehicles (AGVs) or drones that transport goods autonomously, and self-optimizing telecommunication networks.

Market Opportunity

The swarm intelligence market is small but is projected to grow at a 41% CAGR from 2020, reaching USD 447 million by 2030. It has applications in robotics, self-driving cars, aggregating voting data, tracking commercial and political trends, factory production, and more. As devices become more interconnected, it makes sense to lean into local data aggregation to solve problems that require low latency.

Digital Asia Point of View

Many multinational companies are only now localizing their engineering capabilities in Asia. As a result, few companies have local capabilities in connectivity, embedded computing, and AI needed to localize solutions for Asian markets. Technology partner ecosystems can enable companies to accelerate solution localization by filling technical gaps. Product portfolios can also be enhanced by cross-selling with edge computing applications provided by local technology companies.

Effective ecosystems are structured around win-win partnership programs, user-friendly marketplaces, and communities of developers and customers who share expertise and best practices. The companies with the strongest ecosystems tend to win in markets that favor comprehensive portfolios and agility in adapting to changing market conditions. Traditional automation companies that focus on selling hardware rather than software-enabled solutions will likely struggle to maintain margins as their categories become commoditized. Software and ICT companies tend to be better able to deliver integrated solutions.

We recommend adopting a strong “customer/partner-first” perspective when developing an ecosystem strategy. This means ensuring that all stakeholders have clear wins, because self-serving ecosystems do not scale. This approach is not always natural to industrial companies that have thrived by building walled gardens in the past. But with protocols like OPC UA gaining traction and new digital-native competitors entering markets, now is the time to consider an ecosystem-driven approach to building edge computing competence in Asia.

Key Takeaways

Whatever industry you find yourself in, it’s clear that edge computing will have an impact on your ability to optimize internal operations and improve services to customers. As the Internet of Things continues to dominate the technosphere, localized processing will become increasingly vital to its evolution. Everything from the entertainment we consume, to the vehicles that get us from point A to B, to the software that automates industrial processes – almost every aspect of commercial and economic development will be touched by edge computing in the coming years.

However, what is also increasingly clear is that there is opportunity in adopting edge computing as part of your business strategy today. Maybe you’re a long-haul shipping company looking into autonomous vehicles to cut labor costs, or the head of a political campaign looking to explore swarm intelligence and CDNs to micro-target potential voters. The ways edge computing can affect your business are nearly endless.

The key takeaway here is that if you’re not thinking about how edge computing can improve your business, you should be.

Everyone else is.
